The Highly Available Storage (HAST) framework allows transparent storage of the same data across several physically separated machines connected by a TCP/IP network. High availability is one of the main requirements in serious business applications, and highly available storage is a key component in such environments.

The following are the main features of HAST:

- Can be used to mask I/O errors on local hard drives.

- File system agnostic, as it works with any file system supported by FreeBSD.

- Efficient and quick resynchronization, as only the blocks that were modified during the downtime of a node are synchronized.

- Can be used in an already deployed environment to add additional redundancy.

- Together with CARP, Heartbeat, or other tools, it can be used to build a robust and durable storage system.

HAST Configuration

In this document, we will see how to replicate a ZFS volume (zvol) to a zvol of the same size on another node using HAST. The main source is the FreeBSD Handbook chapter at https://www.freebsd.org/doc/handbook/disks-hast.html. Configuring HAST is a simple process that involves a configuration file (hast.conf), the HAST control utility (hastctl), and the hastd daemon, which provides data synchronization between the ‘Master’ and ‘Secondary’ nodes.

Configuration is done using the /etc/hast.conf file, which should be identical on both nodes. I have written a shell script that automatically configures HAST on the master and secondary nodes according to the provided values. Also make sure the same IP address is configured as the CARP IP on both nodes. To learn more about CARP configuration, please refer to the FreeBSD Handbook article at https://www.freebsd.org/doc/handbook/carp.html.
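For orientation, hast.conf follows the format described in hast.conf(5). The fragment below is modeled on the FreeBSD Handbook example and adapted to zvols; the resource name hastvol, the host names hasta and hastb, the pool names tank and tank2, and both IP addresses are hypothetical, and the file produced by the script may differ in detail. Each "on" block is matched against the host name of the node reading the file, which is why a single identical file can serve both nodes: "local" is that node's own zvol and "remote" is the address of the other node.

```
resource hastvol {
    on hasta {
        local /dev/zvol/tank/hastvol
        remote 172.16.0.2
    }
    on hastb {
        local /dev/zvol/tank2/hastvol
        remote 172.16.0.1
    }
}
```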

Note: Before running the hastconf.sh script, make sure the master node’s RSA public key is added as an authorized key on the secondary node, so that the master node can transfer files to the secondary node via scp without a password.

The script can be downloaded from the Git repository at https://gitlab.com/freebsd1/hast. Instructions on how to run it are in the ‘README’ file.

When running hastconf.sh, a few values must be passed so that it can create the hast.conf file on both nodes:

# ./hastconf.sh <remote_host> <remote_ip> <carp_ip> <remote_poolname> <vol_path> <vol_size> <hastvol_name>

Where,

- remote_host => hostname of the secondary node

- remote_ip => IP address of the secondary node

- carp_ip => Shared CARP IP used for failover

- remote_poolname => Name of the pool that already exists on the secondary node

- vol_path => Full path of the zvol created on the primary node

- vol_size => Size of the zvol created on the primary node, such as 15G or 50G

- hastvol_name => User defined name for the hast volume.

After this, you should see the configuration file /etc/hast.conf on both nodes, and the two files will be identical.

Failover Configuration with CARP

The goal is a robust storage system that is resistant to the failure of any given node: if the primary node fails, the secondary node takes over seamlessly, checks and mounts the file system, and continues to work without missing a single bit of data. To achieve this, CARP (Common Address Redundancy Protocol) is used together with a CARP switch script that automatically promotes the secondary node to primary when the primary node fails.
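The shared CARP address itself is configured outside HAST. A minimal sketch following the FreeBSD Handbook CARP chapter is shown below; the interface em0, vhid 1, the password testpass, and the shared address 172.16.0.254 are all hypothetical values to replace with your own.

```
# /boot/loader.conf (both nodes): load the carp(4) module at boot
carp_load="YES"

# /etc/rc.conf on the primary node
ifconfig_em0_alias0="inet vhid 1 pass testpass alias 172.16.0.254/32"

# /etc/rc.conf on the secondary node (higher advskew, so it prefers BACKUP)
ifconfig_em0_alias0="inet vhid 1 advskew 100 pass testpass alias 172.16.0.254/32"
```

A lower advskew makes a node more likely to win the MASTER election, so with this setup the primary normally holds the shared address and the secondary takes it over only when the primary stops advertising.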

Copy the contents of https://gitlab.com/freebsd1/hast/-/blob/master/devd-conf.txt into /etc/devd.conf, copy the script ‘carp-hast-switch.sh’ to /usr/local/sbin/, and then restart the devd service.
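The author’s devd-conf.txt is not reproduced here. For reference, the FreeBSD Handbook uses rules of the following form, shown adapted to the script name used in this article; treat it as an illustration of the mechanism rather than the exact file contents. devd(8) runs the action when carp(4) reports that a vhid on an interface (subsystem "vhid@interface") has become MASTER or BACKUP, passing the desired HAST role to the switch script.

```
notify 30 {
    match "system" "CARP";
    match "subsystem" "[0-9]+@[0-9a-z.]+";
    match "type" "MASTER";
    action "/usr/local/sbin/carp-hast-switch.sh primary";
};

notify 30 {
    match "system" "CARP";
    match "subsystem" "[0-9]+@[0-9a-z.]+";
    match "type" "BACKUP";
    action "/usr/local/sbin/carp-hast-switch.sh secondary";
};
```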

# service devd restart

Note: The above steps have to be performed on both machines.
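To make the role change concrete, here is a deliberately minimal, hypothetical sketch of the core of such a switch script. It is not the carp-hast-switch.sh from the repository: it only calls "hastctl role primary|secondary" for each resource. The variables HASTCTL and HAST_RESOURCES are illustrative assumptions (defaulting to /sbin/hastctl and a resource named hastvol) that also make the logic easy to dry-run. A production script, like the Handbook’s, additionally waits for the /dev/hast/ device to appear after promotion and then, for example, checks and mounts file systems or starts dependent services.

```sh
#!/bin/sh
# Hypothetical minimal role-switch sketch; NOT the author's carp-hast-switch.sh.
# HASTCTL and HAST_RESOURCES are illustrative overrides (assumptions).

switch_role() {
    role=$1

    # Accept only the two roles that devd passes in.
    case "$role" in
    primary|secondary) ;;
    *)
        echo "usage: switch_role primary|secondary" >&2
        return 1
        ;;
    esac

    # HAST_RESOURCES is intentionally unquoted so a space-separated
    # list of resource names is split into individual words.
    for res in ${HAST_RESOURCES:-hastvol}; do
        # Stop at the first resource whose role change fails.
        "${HASTCTL:-/sbin/hastctl}" role "$role" "$res" || return 1
    done
}

# When executed with an argument (e.g. by devd), perform the switch.
if [ $# -gt 0 ]; then
    switch_role "$1"
fi
```

Saved under a hypothetical name such as hast-role-sketch.sh, running `HASTCTL=echo sh hast-role-sketch.sh primary` prints `role primary hastvol` instead of changing anything, which is a convenient way to check the logic before pointing it at the real hastctl.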

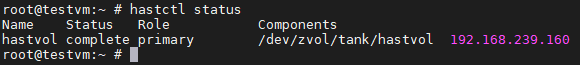

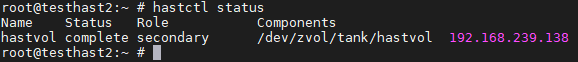

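Then run the command “# hastctl status” on both nodes and check the output, which should look as shown below. The resource status should read complete; if it reads degraded, the nodes are not fully connected or synchronized.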

[Figure: hastctl status output on Node1 and Node2]

That’s it. The ZFS volume has been successfully replicated using HAST. Using the HAST device under /dev/hast/, one can export the volume as iSCSI storage.
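As a sketch of that last step, a minimal ctl.conf(5) for FreeBSD’s ctld(8), based on the Handbook’s iSCSI example, could export the HAST device. The portal group pg0, the target name, and the resource name hastvol in the path are hypothetical. Because /dev/hast/ devices exist only on the current primary, ctld should run only there (for instance, started by the switch script after promotion, which is an assumption about how you integrate it), and initiators should connect to the shared CARP address.

```
portal-group pg0 {
    discovery-auth-group no-authentication
    listen 0.0.0.0
}

target iqn.2012-06.com.example:hastvol {
    auth-group no-authentication
    portal-group pg0

    lun 0 {
        path /dev/hast/hastvol
    }
}
```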

Senthilnathan