Businesses of all sizes need their web applications to run smoothly and scale well. Maintaining high availability and balancing load, however, can be difficult. In this blog post, you will learn how to set up a highly available HAProxy load balancer on Ubuntu 20.04, so that you can deploy stable and scalable applications.

Before setting up the load balancer, it helps to understand what a load balancer does and the roles that Keepalived and HAProxy play.

What is a load balancer?

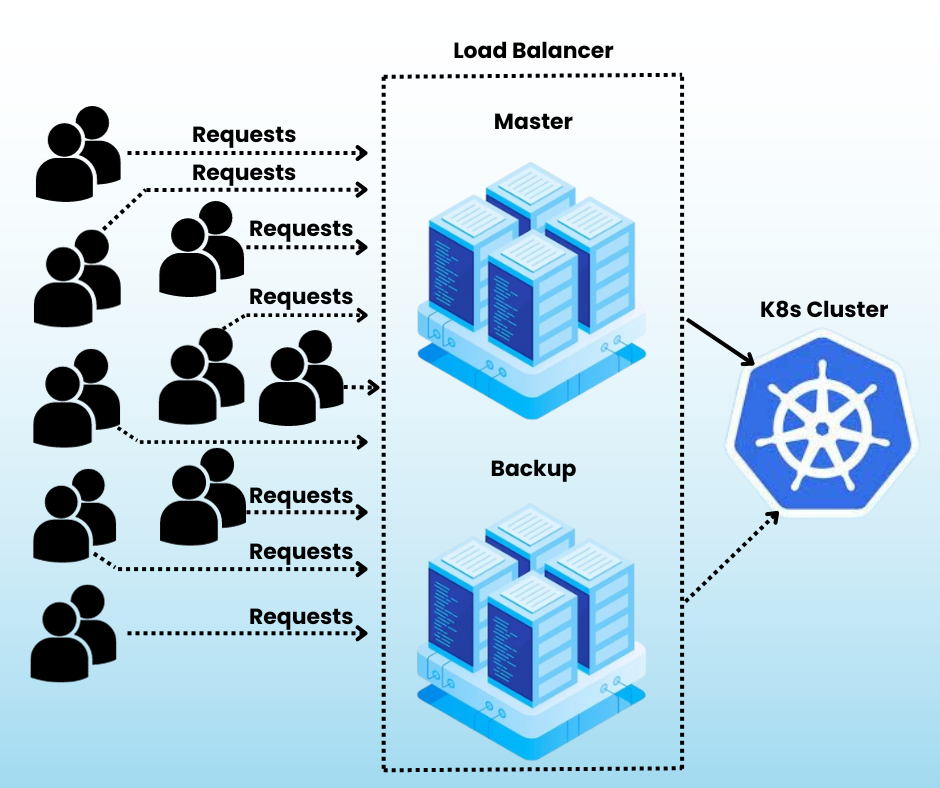

In a Kubernetes deployment, a load balancer distributes incoming traffic across the control-plane nodes of the cluster. It acts as a traffic controller, ensuring that requests are processed quickly and efficiently even when many clients access the same application running as a pod in K8s.

Why do we need keepalived and HAProxy?

HAProxy and Keepalived are two open-source technologies that are frequently used to give applications load balancing and high availability.

Keepalived is a Virtual Router Redundancy Protocol (VRRP) daemon that tracks the health of two or more servers and makes sure that at least one of them is always up and forwarding traffic. To do this, the servers share a virtual IP address (VIP). Keepalived assigns the VIP to the active server and moves it to a backup server if the active server fails.
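To make the failover idea concrete, here is a minimal sketch of the election rule in shell. It is a simplified model and not Keepalived's actual implementation: the function name `elect_master` and the node names `lb1` and `lb2` are made up for illustration, and the priorities 101 and 100 match the ones used later in this post.

```shell
# Simplified model of VRRP master election: among the nodes that are
# currently healthy, the one with the highest priority holds the VIP.
# Each argument is "name:priority:healthy" (healthy is 1 or 0).
elect_master() {
    best_name=""
    best_prio=-1
    for node in "$@"; do
        name=${node%%:*}
        rest=${node#*:}
        prio=${rest%%:*}
        healthy=${rest#*:}
        if [ "$healthy" -eq 1 ] && [ "$prio" -gt "$best_prio" ]; then
            best_name=$name
            best_prio=$prio
        fi
    done
    printf '%s\n' "$best_name"
}

elect_master "lb1:101:1" "lb2:100:1"   # both up: lb1 holds the VIP
elect_master "lb1:101:0" "lb2:100:1"   # lb1 down: lb2 takes over
```

The real daemon exchanges VRRP advertisements between the nodes to reach the same decision, but the outcome follows this rule: the healthy node with the highest priority owns the VIP.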

HAProxy is a load balancer that distributes traffic among several servers. By reducing the load on any single server, it helps improve application performance.
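As a rough illustration of how traffic can be spread across servers, the sketch below models round-robin selection in shell. The function name `pick_backend` is hypothetical, and HAProxy's own scheduler is more sophisticated (it also accounts for server weights and health), so treat this only as the basic idea. The server names are the ones used in the HAProxy configuration later in this post.

```shell
# Round-robin selection: request number N goes to backend N modulo the
# number of backends, so consecutive requests cycle through the list.
# At least one backend must be given.
pick_backend() {
    request_no=$1
    shift
    index=$(( request_no % $# ))
    shift "$index"
    printf '%s\n' "$1"
}

# Requests 0..3 land on master1, master2, master3, then master1 again.
for n in 0 1 2 3; do
    pick_backend "$n" k8s-master1 k8s-master2 k8s-master3
done
```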

Setting Up

We are going to use a TCP (layer 4) load balancer to route public traffic to the Kubernetes cluster. HAProxy handles the load balancing, and Keepalived provides failover between the two load balancer machines.

System Requirements:

To run the load balancers, we need two machines with the following specifications:

- Ubuntu 20.04 LTS

- 2 CPU & 4GB memory

- 30GB HDD SATA/SSD

Pre-Requisites:

Before starting, confirm that both machines have an active public interface and a private interface on the same subnet.
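One way to check the "same subnet" requirement is to compare the network part of the two private addresses. The sketch below does that in plain shell arithmetic. The helper names `ip_to_int` and `same_subnet` are made up for this example, and the /24 prefix length is an assumption; use the prefix length that your private network actually has.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed if both addresses fall in the same network for the given prefix length.
same_subnet() {
    a=$(ip_to_int "$1")
    b=$(ip_to_int "$2")
    prefix=$3
    mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    [ $(( a & mask )) -eq $(( b & mask )) ]
}

# Private addresses of the two load balancers used in this post, assuming a /24 network.
if same_subnet 192.168.1.1 192.168.1.2 24; then
    echo "private interfaces are on the same subnet"
else
    echo "private interfaces are on different subnets"
fi
```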

Installing Keepalived and HAProxy:

To install Keepalived and HAProxy, run the following commands on both machines.

# apt update -y

# apt install keepalived haproxy
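After the installation, a quick sanity check can confirm that both programs are available. This is only a sketch: the helper name `missing_tools` is hypothetical, and it assumes you run it as root (as with the commands above) so that the system binary directories are on your PATH.

```shell
# Print the name of every listed command that is not found on PATH.
missing_tools() {
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
    done
}

missing=$(missing_tools keepalived haproxy)
if [ -z "$missing" ]; then
    echo "keepalived and haproxy are installed"
else
    echo "not found on PATH:" $missing
fi
```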

Configuring Keepalived

To ensure the proper functioning of the Keepalived daemon, one machine must be designated as the master and another one as the backup. To achieve this, create or edit the configuration file /etc/keepalived/keepalived.conf and add the following content accordingly.

Configuring master server

Now comes the important part: configuring the master server. Add the following content to /etc/keepalived/keepalived.conf on the master.

vrrp_script chk_haproxy {

script "/usr/bin/killall -0 haproxy"

interval 2

weight 2

}

# Configuration for Virtual Interface

vrrp_instance LB_VIP {

interface eth0

state MASTER # set to BACKUP on the peer machine

priority 101 # set to 100 on the peer machine

virtual_router_id 51

authentication {

auth_type AH

auth_pass myP@ssword # Password for accessing vrrpd. Same on all devices

}

unicast_src_ip 192.168.1.1 # Private IP address of master

unicast_peer {

192.168.1.2 # Private IP address of the backup haproxy

}

# The virtual ip address shared between the two loadbalancers

virtual_ipaddress {

100.xxx.xxx.xxx dev eth1 label eth1:1

}

# Use the Defined Script to Check whether to initiate a fail over

track_script {

chk_haproxy

}

}

Configuring backup server

Hurray! You have configured the master server. Now configure the backup server with the following content.

vrrp_script chk_haproxy {

script "/usr/bin/killall -0 haproxy"

interval 2

weight 2

}

vrrp_instance LB_VIP {

interface eth0

state BACKUP

priority 100

virtual_router_id 51

authentication {

auth_type AH

auth_pass myP@ssword

}

unicast_src_ip 192.168.1.2 # Private IP address of the backup haproxy

unicast_peer {

192.168.1.1 # Private IP address of the master haproxy

}

# The virtual ip address shared between the two loadbalancers

virtual_ipaddress {

100.xxx.xxx.xxx dev eth1 label eth1:1

}

track_script {

chk_haproxy

}

}

In this configuration file, eth0 is the private interface and eth1 is the public interface. The virtual IP address used here is the public IP address through which the Kubernetes cluster is reached.
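It is worth working through why the configuration uses weight 2 together with priorities 101 and 100. With a positive weight, Keepalived adds the weight to a node's priority only while the tracked script succeeds. The sketch below reproduces that arithmetic; the function name `effective_priority` is made up for illustration.

```shell
# Effective VRRP priority when a tracked script has a positive weight:
# the weight is added to the base priority only while the check succeeds.
effective_priority() {
    base=$1
    weight=$2
    check_ok=$3   # 1 if "killall -0 haproxy" succeeds, 0 otherwise
    if [ "$check_ok" -eq 1 ]; then
        echo $(( base + weight ))
    else
        echo "$base"
    fi
}

effective_priority 101 2 1   # master, HAProxy running  -> 103
effective_priority 100 2 1   # backup, HAProxy running  -> 102
effective_priority 101 2 0   # master, HAProxy stopped  -> 101
```

When HAProxy stops on the master, its priority drops to 101, which is below the backup's 102, so the backup takes over the VIP. This is why the weight must be larger than the gap between the two base priorities.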

Starting the Daemon Service

With Keepalived configured on both the master and backup servers, start the daemon and check its status using the following commands.

# systemctl start keepalived

# systemctl status keepalived
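Once the daemon is running, you may want to confirm which machine currently holds the virtual IP. The following sketch filters the one-line output of `ip -4 -o addr show`. The helper name `has_address` is hypothetical, and 203.0.113.10 is a placeholder from the documentation address range; substitute your own virtual IP.

```shell
# Read `ip -4 -o addr show` output on stdin and succeed if the given
# address is assigned to any interface.
has_address() {
    grep -qF "inet $1/"
}

VIP=203.0.113.10   # placeholder; replace with your virtual IP
if command -v ip >/dev/null 2>&1 && ip -4 -o addr show | has_address "$VIP"; then
    echo "this node currently holds $VIP"
else
    echo "this node does not hold $VIP"
fi
```

On a healthy pair, the master should report that it holds the address and the backup should not. Stopping Keepalived on the master should move the address to the backup within a few seconds.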

Configuring HAProxy

We are almost there. The next step is to configure HAProxy. First, generate the Diffie-Hellman parameters file by running the following command.

# openssl dhparam -out /etc/haproxy/dhparams.pem 2048

Now, edit the HAProxy configuration file /etc/haproxy/haproxy.cfg as follows on both servers.

global

log /dev/log local0

log /dev/log local1 notice

chroot /var/lib/haproxy

stats socket /run/haproxy/admin.sock mode 660 level admin expose-fd listeners

stats timeout 30s

user haproxy

group haproxy

daemon

# Default SSL material locations

ca-base /etc/ssl/certs

crt-base /etc/ssl/private

# See: https://ssl-config.mozilla.org/#server=haproxy&server-version=2.0.3&config=intermediate

ssl-default-bind-ciphers ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:DHE-RSA-AES128-GCM-SHA256:DHE-RSA-AES256-GCM-SHA384

ssl-default-bind-ciphersuites TLS_AES_128_GCM_SHA256:TLS_AES_256_GCM_SHA384:TLS_CHACHA20_POLY1305_SHA256

ssl-default-bind-options ssl-min-ver TLSv1.2 no-tls-tickets

ssl-default-server-ciphers PROFILE=SYSTEM

ssl-dh-param-file /etc/haproxy/dhparams.pem

defaults

log global

mode http

option httplog

option dontlognull

timeout connect 5000

timeout client 50000

timeout server 50000

errorfile 400 /etc/haproxy/errors/400.http

errorfile 403 /etc/haproxy/errors/403.http

errorfile 408 /etc/haproxy/errors/408.http

errorfile 500 /etc/haproxy/errors/500.http

errorfile 502 /etc/haproxy/errors/502.http

errorfile 503 /etc/haproxy/errors/503.http

errorfile 504 /etc/haproxy/errors/504.http

frontend apiserver

bind 100.xxx.xxx.xxx:6443

option tcplog

mode tcp

default_backend apiserver

backend apiserver

option httpchk GET /healthz

http-check expect status 200

mode tcp

option ssl-hello-chk

balance roundrobin

server k8s-master1 <pvt-ip>:6443 check

server k8s-master2 <pvt-ip>:6443 check

server k8s-master3 <pvt-ip>:6443 check

frontend http

bind 100.xxx.xxx.xxx:80,100.xxx.xxx.xxx:443

option tcplog

mode tcp

use_backend https if { dst_port 443 }

default_backend http

backend http

mode tcp

balance roundrobin

server k8s-master1 <pvt-ip>:31989 check send-proxy-v2

server k8s-master2 <pvt-ip>:31989 check send-proxy-v2

server k8s-master3 <pvt-ip>:31989 check send-proxy-v2

backend https

mode tcp

balance roundrobin

server k8s-master1 <pvt-ip>:30640 check send-proxy-v2

server k8s-master2 <pvt-ip>:30640 check send-proxy-v2

server k8s-master3 <pvt-ip>:30640 check send-proxy-v2

In this configuration file, ports 31989 and 30640 are the node ports exposed by the Istio ingress gateway service. Ensure that you provide the appropriate port numbers in the configuration file once Istio is deployed. After making the necessary changes, restart the HAProxy service so that the new configuration is loaded.

# systemctl restart haproxy

# systemctl status haproxy
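If HAProxy reports that it cannot bind a socket on the machine that does not currently hold the virtual IP, a likely cause is that the kernel refuses to bind to an address that is not assigned locally. The sketch below checks the net.ipv4.ip_nonlocal_bind setting and prints the commands to enable it. The helper name `nonlocal_bind_enabled` and the file name 99-haproxy-nonlocal-bind.conf are assumptions chosen for this example.

```shell
# Succeed if the kernel allows binding to addresses not assigned locally.
# The file path can be overridden as the first argument (useful for testing).
nonlocal_bind_enabled() {
    file=${1:-/proc/sys/net/ipv4/ip_nonlocal_bind}
    [ -r "$file" ] && [ "$(cat "$file")" = "1" ]
}

if nonlocal_bind_enabled; then
    echo "net.ipv4.ip_nonlocal_bind is enabled"
else
    echo "net.ipv4.ip_nonlocal_bind is disabled; to enable it persistently, run as root:"
    echo "  echo 'net.ipv4.ip_nonlocal_bind = 1' > /etc/sysctl.d/99-haproxy-nonlocal-bind.conf"
    echo "  sysctl --system"
fi
```

With this setting enabled on both machines, HAProxy can start on the backup and listen on the virtual IP before Keepalived moves the address there.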

Next, proceed with the creation of a Kubernetes cluster using containerd as the runtime environment.

Thank you for taking the time to read our blog. This post, “How to set up a HAProxy load balancer in Ubuntu 20.04?”, was written by our staff member Senthilnathan. We hope you found the information valuable and insightful. If you find any issues with the information provided, don’t hesitate to contact us (info@assistanz.com).
