Kubernetes Overview and Architecture

This blog provides an overview of Kubernetes and its architecture for container environments.

OVERVIEW OF KUBERNETES

- Kubernetes was open-sourced by Google and then handed over to the Cloud Native Computing Foundation (CNCF), which is part of the Linux Foundation.

- It’s one of the most important open-source projects and is written in Go.

- Google runs Search, Gmail, and other services on containers using in-house systems such as Borg and Omega.

- The team that built Borg and Omega also built Kubernetes.

- The name Kubernetes comes from the Greek word for helmsman, the person who steers the ship.

- The shortened name of Kubernetes is K8s.

WHAT IS KUBERNETES?

- Containers have challenges in scalability and synchronization.

- Kubernetes is an orchestrator for microservice apps that run on containers.

- A microservices app is a collection of small, independent services.

- Each service is packaged as a POD, and each one has a job to do.

- Kubernetes organizes the services so that they work together seamlessly. This is called orchestration.

- We package an application and give it to the Kubernetes cluster. The cluster consists of one or more masters and a bunch of nodes.

- Masters are in charge; they decide which nodes the work runs on.

- Nodes do the actual work and report back to the masters.

- Kubernetes is all about running and orchestrating containerized apps.

KUBERNETES ARCHITECTURE

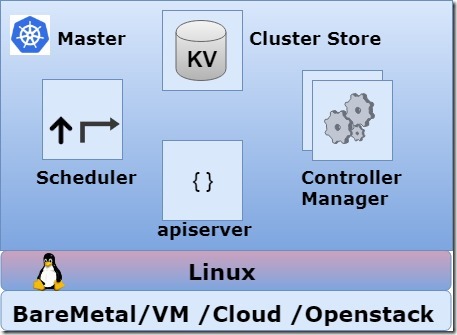

MASTERS

- A Kubernetes cluster is a bunch of masters and nodes.

- Kubernetes runs on Linux. Underneath, the host can be bare metal, a VM, a cloud instance, or an OpenStack instance.

- We can run multiple masters in a high-availability (HA) setup to share the workload.

- Kube-apiserver is the front end of the master control plane. It is the only master component with an external-facing interface, which it exposes as a REST API that consumes JSON. It is typically served over HTTPS on port 443 (many installations use 6443 instead).

- The cluster store is the memory of the cluster. It stores the configuration and state of the cluster. It uses etcd, an open-source distributed key-value (KV) store originally developed by CoreOS. As a key-value store, etcd is a NoSQL database.

- Kube-controller-manager is the controller of controllers. It includes a node controller, an endpoints controller, a namespace controller, and others, covering the main object types in K8s. Each controller watches for changes and works to maintain the Desired State.

- Kube-scheduler watches the API server for new PODs and assigns them to nodes. It takes affinity/anti-affinity rules, constraints, and resource availability into account.
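
The scheduler's job of assigning new PODs to nodes can be sketched in a few lines of Python. This is only a toy model, not the real kube-scheduler algorithm: the `Node` and `Pod` classes, the single `cpu_free` resource, and the "most free CPU wins" rule are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    name: str
    cpu_free: float  # CPU cores still available (illustrative unit)
    pods: list = field(default_factory=list)


@dataclass
class Pod:
    name: str
    cpu_request: float
    node_name: Optional[str] = None  # unset until the scheduler binds the pod


def schedule(pending, nodes):
    """Bind each unscheduled pod to the fitting node with the most free CPU."""
    for pod in pending:
        if pod.node_name is not None:
            continue  # already bound to a node
        candidates = [n for n in nodes if n.cpu_free >= pod.cpu_request]
        if not candidates:
            continue  # no node fits, so the pod stays pending
        best = max(candidates, key=lambda n: n.cpu_free)
        best.cpu_free -= pod.cpu_request
        best.pods.append(pod.name)
        pod.node_name = best.name
    return pending


# Hypothetical cluster: two nodes and three pods waiting to be placed.
nodes = [Node("node-a", cpu_free=2.0), Node("node-b", cpu_free=1.0)]
pods = [Pod("web-1", 1.5), Pod("web-2", 1.0), Pod("web-3", 4.0)]
schedule(pods, nodes)
for pod in pods:
    print(pod.name, "->", pod.node_name or "Pending")
```

In this sketch `web-3` asks for more CPU than any node has free, so it is left unbound, which mirrors how an unschedulable POD waits until capacity appears.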

KUBERNETES WORKING OVERVIEW

- Commands and queries are issued through the kubectl command-line tool.

- kubectl sends them to the API server as REST requests with JSON payloads.

- The API server is where authentication happens. Once that is complete, it works with the other components (scheduler, controllers, and cluster store) according to the command.

- The resulting work items are then handed over to the nodes.
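
To make the flow above more concrete, here is a rough sketch of the kind of request that ends up at the API server when a POD is created. It only builds the request as a Python dictionary and does not talk to a cluster; the helper name `build_create_pod_request`, the POD name `hello-web`, and the image tag are hypothetical, and real clients also attach credentials, which are left out here.

```python
import json


def build_create_pod_request(name, image, namespace="default"):
    """Return the method, path, and JSON body for creating a pod."""
    manifest = {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {"containers": [{"name": name, "image": image}]},
    }
    return {
        "method": "POST",
        "path": f"/api/v1/namespaces/{namespace}/pods",
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(manifest, sort_keys=True),  # JSON payload for the API server
    }


# Hypothetical pod name and image, used only for illustration.
request = build_create_pod_request("hello-web", "nginx:1.25")
print(request["method"], request["path"])
print(request["body"])
```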

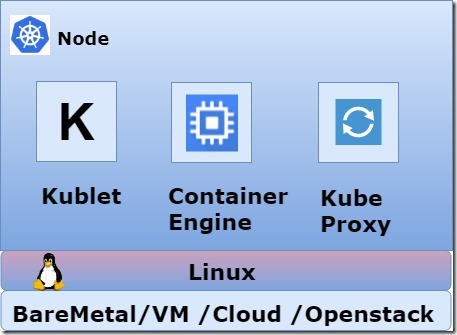

NODES

- Nodes are the Kubernetes workers. They take instructions from the masters.

- Each node contains the Kubelet, a container runtime, and Kube-proxy.

- Kubelet is the main Kubernetes agent on a node. It is installed on a Linux host, registers the node with the Kubernetes cluster, and watches the API server for work assignments.

- Once it receives a task, it carries out the task and reports back to the master. It also reports failures and overload issues to the Kubernetes master.

- The control plane decides what to do next. Kubelet is not responsible for recovering a failed POD; it simply reports the status to the master.

- Kubelet exposes a read-only endpoint on localhost at port 10255.

- The spec endpoint provides information about the node, the health endpoint performs a health check, and the pods endpoint shows the currently running PODs.

- The container engine handles container management, such as pulling images and starting and stopping containers.

- The container runtime is usually Docker. CoreOS rkt (Rocket) can also be used with Kubernetes; runtimes are pluggable.

- Kube-proxy is the network brain of a node. It maintains the network rules that deliver Service traffic to the right PODs.

- Kubernetes networking gives every POD its own unique IP address: one IP per POD.

- All the containers inside a POD share that single IP.

- Kube-proxy also takes care of load balancing across all the PODs in a Service.
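
As a small illustration of the Kubelet endpoints mentioned earlier in this section, the sketch below builds the URLs one might query on a node. The exact paths (`/spec/`, `/healthz`, `/pods`) are assumptions based on the endpoint names in the text, and port 10255 is often disabled on newer clusters, so treat this as a sketch rather than a guaranteed interface. The code only constructs the URLs and makes no network calls.

```python
from urllib.parse import urlunsplit

KUBELET_READONLY_PORT = 10255  # port named in the text; may be disabled on a given cluster

# Assumed paths for the spec, health, and pods endpoints.
ENDPOINT_PATHS = {
    "spec": "/spec/",
    "health": "/healthz",
    "pods": "/pods",
}


def kubelet_url(endpoint, host="localhost", port=KUBELET_READONLY_PORT):
    """Build the URL for one of the Kubelet read-only endpoints."""
    path = ENDPOINT_PATHS[endpoint]
    return urlunsplit(("http", f"{host}:{port}", path, "", ""))


for name in ENDPOINT_PATHS:
    print(name, kubelet_url(name))
```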

DECLARATIVE MODEL AND DESIRED STATE

- Kubernetes operates on a declarative model. We provide manifest files to the API server that describe how we want the cluster to look.

- For example, we tell K8s to use a given image and always keep 5 copies of it running. K8s takes care of all the work, such as pulling images, creating containers, and assigning networking.

- The state described in the manifest file is called the Desired State of the cluster.

- When the Actual State of the cluster drifts from the Desired State, Kubernetes takes action on its own to restore it.

- Kubernetes constantly checks whether the Actual State of the cluster matches the Desired State. If the two don’t match, it keeps working until they do.
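
The idea of constantly comparing the Actual State with the Desired State can be sketched as a tiny reconcile function. This is a simplified model, not how Kubernetes controllers are actually implemented: the `reconcile` function, the dictionary layout, and the generated POD names are hypothetical and exist only to show the loop of "observe, compare, correct".

```python
import itertools

_ids = itertools.count(1)  # source of unique, made-up pod names


def reconcile(desired, actual_pods):
    """Take one pass at moving the actual pod list toward the desired state."""
    # Keep only pods that still run the desired image.
    pods = [p for p in actual_pods if p["image"] == desired["image"]]
    # Too few pods: create replacements until the count matches.
    while len(pods) < desired["replicas"]:
        pods.append({"name": f"app-{next(_ids)}", "image": desired["image"]})
    # Too many pods: trim the surplus.
    return pods[: desired["replicas"]]


# Desired State from the example in the text: one image, always 5 copies.
desired = {"image": "nginx:1.25", "replicas": 5}

actual = reconcile(desired, [])       # first pass builds the environment
print(len(actual), "pods running")

actual = actual[:3]                   # simulate two pods dying (drift)
actual = reconcile(desired, actual)   # next pass restores the Desired State
print(len(actual), "pods running")
```

Running the function repeatedly is safe: when the Actual State already matches the Desired State, a pass changes nothing.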

PODS

Containers always run inside a POD.

We cannot deploy a container directly in Kubernetes. A container without a POD is called a naked container.

PODS can have one or multiple containers.

A POD is just a ring-fenced environment to run containers. It’s like a sandbox with the containers in it.

Inside the POD, we create a network stack, kernel namespaces, and any number of containers.

Multiple containers share a single POD environment. Two containers inside the same POD talk to each other over the local (localhost) interface.

If a couple of containers need to share the same memory and volumes, we can place them in a single POD. This is called Tight Coupling.

If the containers do not need to be coupled that closely, place them in separate PODs and connect them over the network. This is called Loose Coupling.

Scaling in Kubernetes is done by adding and removing PODs. Don’t scale by adding more containers to an existing POD.

For multi-container PODs, we can have two or more complementary containers that are related to each other inside a POD. The primary one is the main container, and a secondary one is called a sidecar container.

PODs can be scaled within a few seconds. A POD will never be declared as available until the whole POD is up and running.

We cannot have a single POD spread over multiple nodes.

The phases of the POD life cycle are Pending, Running, and then Succeeded or Failed.

When a POD dies, the replication controller starts another one in its place.

We can deploy PODs directly in Kubernetes through the API server using a manifest file.
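
A multi-container POD with a main container and a sidecar can be pictured as a manifest like the one below, written here as a Python dictionary. The names (`web-with-sidecar`, `log-shipper`), images, and paths are illustrative assumptions; the point is that both containers live in one POD and mount the same volume, which is the tight coupling described above.

```python
import json

# Hypothetical pod: an nginx main container plus a log-tailing sidecar.
pod_manifest = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-with-sidecar", "labels": {"app": "web"}},
    "spec": {
        "volumes": [{"name": "logs", "emptyDir": {}}],
        "containers": [
            {
                "name": "web",  # main container
                "image": "nginx:1.25",
                "volumeMounts": [{"name": "logs", "mountPath": "/var/log/nginx"}],
            },
            {
                "name": "log-shipper",  # sidecar container
                "image": "busybox:1.36",
                "command": ["sh", "-c", "tail -F /logs/access.log"],
                "volumeMounts": [{"name": "logs", "mountPath": "/logs"}],
            },
        ],
    },
}


def shared_volumes(manifest):
    """Return the names of volumes mounted by every container in the pod."""
    mounts = [
        {m["name"] for m in c.get("volumeMounts", [])}
        for c in manifest["spec"]["containers"]
    ]
    return set.intersection(*mounts)


print(json.dumps(pod_manifest, indent=2))
print("shared volumes:", sorted(shared_volumes(pod_manifest)))
```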

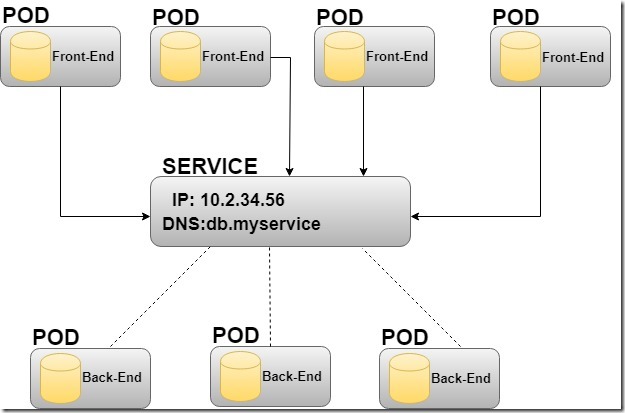

SERVICE

- An application in Kubernetes is made up of one or more PODs. If a POD dies, its replacement may start on another node with a new IP, so we cannot rely on POD IPs. That is a massive issue.

- In the example above, we have a bunch of web servers as the front end, talking to a back-end DB server.

- We place a Service object between these two ends. A Service is a Kubernetes object, like a POD or a Deployment, that we define in a manifest file. We provide the manifest file to the API server to create the Service object.

- It provides a stable IP and DNS name for the DB pods.

- Using that single IP, the Service load-balances requests across the DB PODs.

- If a POD fails and a new one is created, the Service detects this and updates its records automatically.

- A POD belongs to a Service via labels. Labels are the simplest and most powerful thing in Kubernetes.

- Labels are used to identify the particular PODS for the load balancing.

- Services send traffic only to healthy PODs. If a POD fails its health check, the Service stops sending traffic to it.

- Services load-balance on a random basis by default. Round robin is also supported and can be turned on.

- By default, a Service uses TCP, but UDP is also supported.
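
How a Service picks its PODs through labels, skips unhealthy ones, and spreads requests at random can be modelled roughly as follows. This is a simplified sketch, not the actual Service implementation: the functions `matches`, `endpoints`, and `pick_backend`, the `healthy` flag, and the IP addresses are all made up for illustration.

```python
import random


def matches(selector, labels):
    """A pod matches when it carries every key/value pair in the selector."""
    return all(labels.get(k) == v for k, v in selector.items())


def endpoints(selector, pods):
    """IPs of pods that match the selector and pass their health check."""
    return [p["ip"] for p in pods if matches(selector, p["labels"]) and p["healthy"]]


def pick_backend(selector, pods, rng=random):
    """Choose one healthy backend at random, as the text describes."""
    backends = endpoints(selector, pods)
    if not backends:
        raise RuntimeError("no healthy pods behind this service")
    return rng.choice(backends)


# Hypothetical pods: three DB pods (one unhealthy) and one web pod.
pods = [
    {"ip": "10.0.0.4", "labels": {"app": "db", "tier": "backend"}, "healthy": True},
    {"ip": "10.0.0.5", "labels": {"app": "db", "tier": "backend"}, "healthy": False},
    {"ip": "10.0.0.6", "labels": {"app": "web"}, "healthy": True},
    {"ip": "10.0.0.7", "labels": {"app": "db", "tier": "backend"}, "healthy": True},
]
selector = {"app": "db"}

print(endpoints(selector, pods))      # only healthy pods labelled app=db
print(pick_backend(selector, pods))   # one of those, chosen at random
```

Because membership is computed from labels each time, a replacement POD with a new IP is picked up as soon as it carries the right labels and reports healthy.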

DEPLOYMENTS

- Deployments are all about the declarative model.

- In the deployment model, the replication controller is replaced with replica sets.

- Replica sets are the next-generation replication controller.

- Deployments have a powerful update model and simple rollbacks.

- Rolling updates are the core feature of deployments.

- We can have multiple concurrent deployment versions.

- Kubernetes will detect and stop rollouts if they are not working.

- It’s a newer model in the Kubernetes world.
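
A rolling update and a rollback can be pictured with a short simulation. This is only a conceptual sketch under simple assumptions (one POD replaced per step, versions represented as plain strings, hypothetical function names); real Deployments manage replica sets and have more rollout settings than shown here.

```python
def rolling_update(pods, new_version):
    """Replace pods one at a time; yield a snapshot after every step."""
    pods = list(pods)
    for i in range(len(pods)):
        pods[i] = new_version  # one old pod is replaced by a new-version pod
        yield list(pods)


def rollback(history):
    """Return the pod list from before the rollout started."""
    return list(history[0])


current = ["v1", "v1", "v1"]
history = [current] + list(rolling_update(current, "v2"))
for step in history:
    print(step)
print("after rollback:", rollback(history))
```

The intermediate snapshots contain both `v1` and `v2`, which corresponds to having multiple versions running concurrently while the rollout is in progress.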

Thank you for taking the time to read our blog on “Basic Overview of the Kubernetes and its Architecture”. We hope you found the information valuable and insightful. If you find any issues with the information provided in this blog, don’t hesitate to contact us (info@assistanz.com).
